Native JSON Output From GPT-4

When integrating LLMs into your products, you often want to generate structured data, like JSON. With the help of function calling (released June 13th 2023), this process has become much simpler!

In this post, I will explore the new API.

Function Calling

Function calling allows GPT to respond with a request to call a function (the function's name plus JSON-encoded arguments) instead of returning a plain-text message. At the time of writing, this feature is available for the chat models gpt-3.5-turbo-0613 and gpt-4-0613.

For this feature, two new parameters have been introduced in the Chat Completions API:

- functions: A list of functions available to GPT, each with a name, description and a JSON Schema of the parameters.
- function_call: You can force GPT to use a specific function (or explicitly forbid calling any functions).

I realized that by setting the function_call to a specific function, you can reliably expect JSON as the response from GPT calls. No more strings, yay!
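To make the three settings concrete, here is a minimal sketch of how the two parameters could be assembled before sending a request. The `get_weather` function definition and the `function_call_setting` helper are hypothetical names I made up for illustration; the values `"auto"`, `"none"` and `{"name": ...}` are the ones the `function_call` parameter accepts.

```python
from typing import Optional

# Hypothetical function definition; the name and description are made up.
get_weather = {
    "name": "get_weather",
    "description": "Look up the current weather for a city",
    "parameters": {  # JSON Schema describing the arguments
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def function_call_setting(mode: str, name: Optional[str] = None):
    """Map a readable mode onto a value for the function_call parameter."""
    if mode == "auto":    # the model decides whether to call a function
        return "auto"
    if mode == "forbid":  # the model must answer with a normal message
        return "none"
    if mode == "force":   # the model must call the named function
        if name is None:
            raise ValueError("force mode needs a function name")
        return {"name": name}
    raise ValueError(f"unknown mode: {mode}")


# Keyword arguments that would be passed alongside model and messages.
request_kwargs = {
    "functions": [get_weather],
    "function_call": function_call_setting("force", "get_weather"),
}
print(request_kwargs["function_call"])  # {'name': 'get_weather'}
```

The "force" setting is the one this post relies on: it is what turns the API into a JSON generator.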

Let's see it in action with a demo app, Recipe Creator.

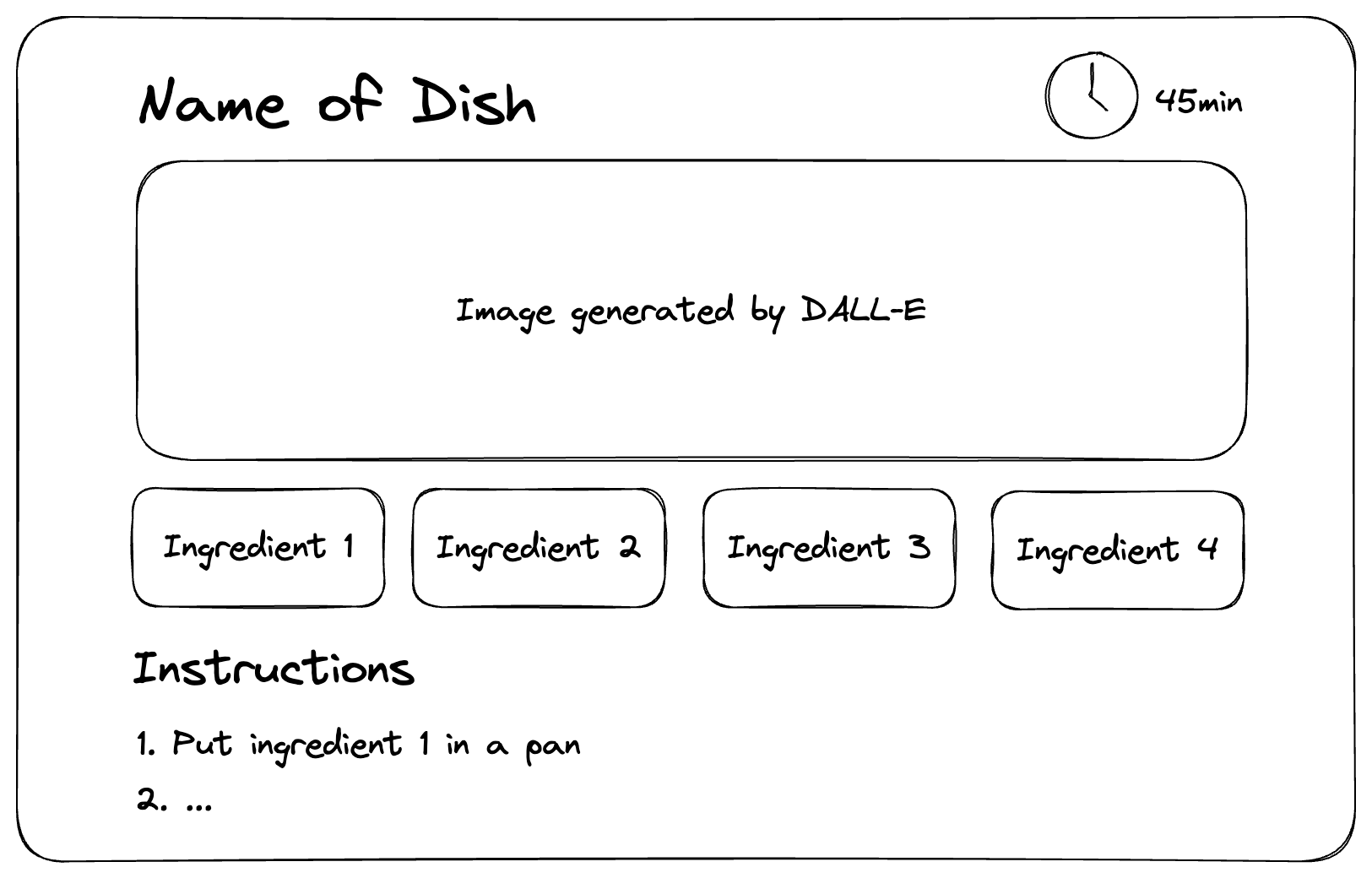

Recipe Creator

Recipe Creator is an app where the user inputs the name of a dish and is provided with instructions on how to cook it. Of course, it can be used to generate recipes for completely fictional dishes; if you can name it, there’s a recipe for it!

Our frontend developer has asked us to create a backend API which returns a JSON like this one:

    {
      "ingredients": [
        { "name": "Ingredient 1", "amount": 5, "unit": "grams" },
        { "name": "Ingredient 2", "amount": 1, "unit": "cup" }
      ],
      "instructions": [
        "Do step 1",
        "Do step 2"
      ],
      "time_to_cook": 5 // minutes
    }

Let’s get started.

JSON Schema

First, let’s create a JSON schema based on the example dataset.

    schema = {
      "type": "object",
      "properties": {
        "ingredients": {
          "type": "array",
          "items": {
            "type": "object",
            "properties": {
              "name": { "type": "string" },
              "unit": {
                "type": "string",
                "enum": ["grams", "ml", "cups", "pieces", "teaspoons"]
              },
              "amount": { "type": "number" }
            },
            "required": ["name", "unit", "amount"]
          }
        },
        "instructions": {
          "type": "array",
          "description": "Steps to prepare the recipe (no numbering)",
          "items": { "type": "string" }
        },
        "time_to_cook": {
          "type": "number",
          "description": "Total time to prepare the recipe in minutes"
        }
      },
      "required": ["ingredients", "instructions", "time_to_cook"]
    }
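Before sending a schema to the API, it can help to check a hand-written sample against it. The sketch below is a tiny, hand-rolled checker covering only the keywords used in this post (`type`, `properties`, `required`, `items`, `enum`); the `check` function and the trimmed `ingredient_schema` are my own illustrative names, and a real project would more likely reach for a full JSON Schema validator.

```python
def check(value, schema, path="$"):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    kind = schema.get("type")
    if kind == "object":
        if not isinstance(value, dict):
            return [f"{path}: expected object"]
        for key in schema.get("required", []):
            if key not in value:
                problems.append(f"{path}: missing required key '{key}'")
        for key, sub in schema.get("properties", {}).items():
            if key in value:
                problems += check(value[key], sub, f"{path}.{key}")
    elif kind == "array":
        if not isinstance(value, list):
            return [f"{path}: expected array"]
        for i, item in enumerate(value):
            problems += check(item, schema.get("items", {}), f"{path}[{i}]")
    elif kind == "string":
        if not isinstance(value, str):
            return [f"{path}: expected string"]
    elif kind == "number":
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return [f"{path}: expected number"]
    if "enum" in schema and value not in schema["enum"]:
        problems.append(f"{path}: {value!r} not in {schema['enum']}")
    return problems


# Trimmed copy of the ingredient part of the schema.
ingredient_schema = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "unit": {"type": "string",
                 "enum": ["grams", "ml", "cups", "pieces", "teaspoons"]},
        "amount": {"type": "number"},
    },
    "required": ["name", "unit", "amount"],
}

good = {"name": "flour", "amount": 5, "unit": "grams"}
bad = {"name": "milk", "amount": 1, "unit": "cup"}  # "cup" is not in the enum

print(check(good, ingredient_schema))  # []
print(check(bad, ingredient_schema))   # one problem, reported at $.unit
```

A check like this catches small mismatches, such as a singular unit where the enum only lists the plural, before they reach the frontend.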

API call

Now, let’s call the OpenAI API and pass the JSON schema defined above:

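    import os

    import openai  # written against the pre-1.0 openai SDK (openai.ChatCompletion)

    openai.api_key = os.getenv("OPENAI_API_KEY")

    completion = openai.ChatCompletion.create(
        model="gpt-4-0613",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Provide a recipe for spaghetti bolognese"},
        ],
        # Expose a single function whose parameters are the recipe schema
        functions=[{"name": "set_recipe", "parameters": schema}],
        # Force the model to call set_recipe rather than reply in plain text
        function_call={"name": "set_recipe"},
        temperature=0,
    )

    # The arguments arrive as a JSON-encoded string
    print(completion.choices[0].message.function_call.arguments)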

Here’s the result of running the above code, a recipe for spaghetti bolognese:

    {
      "ingredients": [
        {"name": "spaghetti", "unit": "grams", "amount": 400},
        {"name": "ground beef", "unit": "grams", "amount": 500},
        {"name": "onion", "unit": "pieces", "amount": 1},
        {"name": "garlic cloves", "unit": "pieces", "amount": 2},
        {"name": "carrot", "unit": "pieces", "amount": 1},
        {"name": "celery stalk", "unit": "pieces", "amount": 1},
        {"name": "canned tomatoes", "unit": "grams", "amount": 400},
        {"name": "red wine", "unit": "ml", "amount": 125},
        {"name": "olive oil", "unit": "ml", "amount": 30},
        {"name": "salt", "unit": "teaspoons", "amount": 1},
        {"name": "pepper", "unit": "teaspoons", "amount": 1}
      ],
      "instructions": [
        "Heat the olive oil in a large pan over medium heat. Add the finely chopped onion, carrot, celery, and minced garlic and cook until softened.",
        "Add the ground beef to the pan and cook until browned.",
        "Pour in the red wine and let it simmer until the alcohol has evaporated.",
        "Add the canned tomatoes, salt, and pepper. Reduce the heat to low, cover the pan, and let it simmer for about 1 hour, stirring occasionally.",
        "In the meantime, cook the spaghetti in a large pot of boiling salted water according to the package instructions until al dente.",
        "Drain the spaghetti and add it to the pan with the Bolognese sauce. Toss well to combine.",
        "Serve the Spaghetti Bolognese with a sprinkle of freshly grated Parmesan cheese on top."
      ],
      "time_to_cook": 90
    }

Perfect! 🧑‍🍳

Implications

I believe the new API will change the way we interact with OpenAI LLMs beyond the obvious use-case of plugins.

This is different from prompt engineering

You could already generate JSON output with the help of prompt engineering: You put some JSON examples as part of GPT’s context window and ask it to generate a new one (few-shot prompting).
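For comparison, here is a rough sketch of what that few-shot setup tends to look like. The example pair, the system prompt wording and the `few_shot_messages` helper are all hypothetical; the point is only that every example has to travel along in the prompt.

```python
import json

# Hypothetical example pairs: (user request, JSON we want back).
EXAMPLES = [
    (
        "Provide a recipe for toast",
        {
            "ingredients": [{"name": "bread", "unit": "pieces", "amount": 2}],
            "instructions": ["Toast the bread until golden"],
            "time_to_cook": 3,
        },
    ),
]


def few_shot_messages(examples, request):
    """Build a chat history that shows the model the JSON shape by example."""
    messages = [
        {"role": "system", "content": "Reply with JSON only, matching the examples."}
    ]
    for question, answer in examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": json.dumps(answer)})
    # The real request goes last, after all the worked examples.
    messages.append({"role": "user", "content": request})
    return messages


messages = few_shot_messages(EXAMPLES, "Provide a recipe for spaghetti bolognese")
print(len(messages))  # 4: system, example question, example answer, request
```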

This approach works well for simple cases but is prone to errors. GPT makes simple mistakes (like missing commas and unescaped line breaks) and sometimes gets completely derailed. You can also intentionally derail GPT with prompt injection.

This means that you need to defensively parse the output of GPT to salvage as much usable information as possible. Libraries like LangChain or llmparser help in this process, but come with their own limitations and boilerplate code.

With lower-level access to the large language model, you can do much better. I don’t have access to GPT-4’s source, but I assume OpenAI’s implementation works in a way that is conceptually similar to jsonformer, where the token selection algorithm is changed from “choose the token with the highest logit” to “choose the token with the highest logit which is valid for the schema”.
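As a toy illustration of that idea (not jsonformer's actual code, and certainly not OpenAI's), the selection rule can be sketched in a few lines. The logits and the set of allowed tokens below are invented numbers; in a real system the allowed set would be derived from the schema and the text generated so far.

```python
from typing import Dict, Set


def pick_token(logits: Dict[str, float], allowed: Set[str]) -> str:
    """Return the highest-logit token among those the schema allows."""
    candidates = {tok: score for tok, score in logits.items() if tok in allowed}
    if not candidates:
        raise ValueError("no allowed token has a logit")
    return max(candidates, key=candidates.get)


# Toy logits for the position right after `"amount": 400` (made-up values).
logits = {" grams": 4.1, ",": 3.2, "}": 2.7, "\n": 1.0}
# Toy assumption: only these tokens keep the output valid at this position.
allowed = {",", "}"}

print(repr(max(logits, key=logits.get)))  # ' grams' (unconstrained choice)
print(repr(pick_token(logits, allowed)))  # ',' (constrained choice)
```

The unconstrained pick would have broken the JSON; the constrained pick cannot, no matter how strongly the model prefers the invalid token.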

This means that the burden of following the specific schema is lifted from GPT and instead embedded into the token generation process.

[Edit: OpenAI warns that the model may generate invalid JSON. They might not be using jsonformer’s approach after all.]
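Given that warning, it seems prudent to keep a small guard around the parsing step. This is a minimal sketch; `parse_arguments` is a hypothetical helper name, and it only assumes that the arguments arrive as a string, as in the example above.

```python
import json
from typing import Optional


def parse_arguments(raw: str) -> Optional[dict]:
    """Parse the function_call arguments string; return None if unusable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    # The schema's top level is an object, so anything else counts as a failure.
    return data if isinstance(data, dict) else None


print(parse_arguments('{"time_to_cook": 90}'))  # {'time_to_cook': 90}
print(parse_arguments('{"time_to_cook": 9'))    # None (truncated output)
```

What to do on `None` (retry, fall back, report an error) is up to the caller.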

Lower token usage

The example above used 126 prompt tokens and 538 completion tokens (costing about $0.036 with GPT-4 or $0.0012 with GPT-3.5).
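The arithmetic behind those figures can be sketched as follows. The per-1,000-token prices are my assumption of the list prices at the time of writing (they are not stated in this post), so treat the table as a placeholder to update.

```python
# Assumed list prices in USD per 1,000 tokens (an assumption, not from the post).
PRICES = {
    "gpt-4-0613": {"prompt": 0.03, "completion": 0.06},
    "gpt-3.5-turbo-0613": {"prompt": 0.0015, "completion": 0.002},
}


def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of one request in USD under the assumed price table."""
    price = PRICES[model]
    total = prompt_tokens * price["prompt"] + completion_tokens * price["completion"]
    return total / 1000


for model in PRICES:
    print(model, f"${request_cost(model, 126, 538):.4f}")
```

Under these assumed prices the totals come to about $0.036 for GPT-4 and just under $0.0013 for GPT-3.5, close to the figures quoted above. Note that completion tokens dominate the bill here, so trimming the prompt matters less than it might seem for this particular request.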

If you were to use few-shot prompting to get the same results, you would need more prompt tokens for the same task, because every example has to be sent along with each request.

Lower token usage means faster and cheaper API calls.

Less cognitive load on GPT

The more things you ask of GPT at the same time, the more likely it is to make mistakes or hallucinate.

By removing the instructions for following a specific JSON format from your prompts, you simplify the task for GPT.

My intuition is that this increases the likelihood of success, meaning that your accuracy should go up.

Furthermore, you might be able to downgrade to a smaller GPT model in places where the JSON complexity made it otherwise infeasible and gain speed and cost reduction benefits.

Natural language to structured data

I was surprised by how little code was needed to build the recipe example. Doing something like this took far more boilerplate code in my previous attempts without function calling.

It is very cool that you can “code” an “intelligent” backend API in natural language. You can build such an API in a few hours.

LLMs as backends

While most examples from OpenAI present functions as a step towards answering the user’s query in a chat context (plugins), the recipe example here shows that you can also use a function call as the final target.

An example could be Excel allowing users to define new buttons for the toolbar by explaining the button's functionality in plaintext. The prompt gets fed to GPT and the response is coerced to be a valid Excel API call.

Another example would be no-code prototyping tools, which could leverage LLMs to let their users define button functionality.

The fact that you no longer need prompt engineering to generate correct output makes it easier to use LLMs as no-code backends.

Running an LLM every time someone clicks on a button is expensive and slow in production, but probably still ~10x cheaper to produce than code, and accessible to larger groups of people.

Thought processes as JSON Schema

You can do Chain of Thought Prompting or even implement ReAct as part of the JSON schema (GPT seems to respect the definition order of object properties).
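A minimal sketch of what that could look like: the reasoning field is declared before the answer field, relying on the observation above that GPT seems to fill properties in definition order. The property names and the `submit_answer` function name are hypothetical.

```python
cot_schema = {
    "type": "object",
    "properties": {
        # Declared first so the reasoning is written before the answer
        "reasoning": {
            "type": "string",
            "description": "Step-by-step reasoning towards the answer",
        },
        # Declared second so it can build on the reasoning above
        "answer": {
            "type": "string",
            "description": "Final answer only, without explanation",
        },
    },
    "required": ["reasoning", "answer"],
}

# Hypothetical function definition that would be passed via `functions`.
functions = [{"name": "submit_answer", "parameters": cot_schema}]

# Python dicts keep insertion order, so the schema is serialized in this order.
print(list(cot_schema["properties"]))  # ['reasoning', 'answer']
```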

OpenAI’s API seems to support JSON Schema features like $ref (recursion) and oneOf (multiple choice), meaning that you should be able to implement more complex agents and recursive thought processes via JSON schema in a single API request (as long as it fits in the context window).
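Here is a rough sketch of a recursive schema that combines the two: each step is either a final answer or a thought with nested sub-steps. The property names are hypothetical, and placing the shared definition under `$defs` is my assumption; I have not verified which reference layout the API accepts. The small `resolve` helper only demonstrates that the reference points at a real node.

```python
plan_schema = {
    "type": "object",
    "properties": {"root": {"$ref": "#/$defs/step"}},
    "required": ["root"],
    "$defs": {
        "step": {
            "oneOf": [
                {   # leaf: a final answer
                    "type": "object",
                    "properties": {"answer": {"type": "string"}},
                    "required": ["answer"],
                },
                {   # branch: a thought followed by further steps (recursion)
                    "type": "object",
                    "properties": {
                        "thought": {"type": "string"},
                        "substeps": {
                            "type": "array",
                            "items": {"$ref": "#/$defs/step"},
                        },
                    },
                    "required": ["thought", "substeps"],
                },
            ]
        }
    },
}


def resolve(ref: str, root: dict) -> dict:
    """Follow a local reference such as '#/$defs/step' inside the schema."""
    node = root
    for part in ref.lstrip("#/").split("/"):
        node = node[part]
    return node


step = resolve(plan_schema["properties"]["root"]["$ref"], plan_schema)
print(len(step["oneOf"]))  # 2 alternatives: leaf or branch
```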

This means that you can embed complex strategies into a single API call which makes your agents run faster and consume fewer tokens (since you don’t pass the same context across multiple API calls).

Not every JSON Schema feature is supported (if/else seems to be ignored, as is const), but I do wonder whether the supported features are enough to make the schema language Turing-complete.

Simon's Blog
